Checkpoints

- Create cluster and deploy an app: 40 points

- Migrate to an Optimized Nodepool: 20 points

- Apply a Frontend Update: 20 points

- Autoscale from Estimated Traffic: 20 points

Optimize Costs for Google Kubernetes Engine: Challenge Lab

GSP343

Introduction

In a challenge lab you’re given a scenario and a set of tasks. Instead of following step-by-step instructions, you will use the skills learned from the labs in the course to figure out how to complete the tasks on your own! An automated scoring system (shown on this page) will provide feedback on whether you have completed your tasks correctly.

When you take a challenge lab, you will not be taught new Google Cloud concepts. You are expected to extend your learned skills, like changing default values and reading and researching error messages to fix your own mistakes.

To score 100% you must successfully complete all tasks within the time period!

This lab is only recommended for students who are enrolled in the Optimize Costs for Google Kubernetes Engine course. Are you ready for the challenge?

Setup and requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab. Remember, once you start, you cannot pause a lab.

Challenge scenario

You are the lead Google Kubernetes Engine admin on a team that manages the online shop for OnlineBoutique.

You are ready to deploy your team's site to Google Kubernetes Engine but you are still looking for ways to make sure that you're able to keep costs down and performance up.

You will be responsible for deploying the OnlineBoutique app to GKE and making some configuration changes that have been recommended for cost optimization.

Here are some guidelines you've been requested to follow when deploying:

- Create the cluster in the zone assigned to your lab.

- The naming scheme is team-resource-number, as in the cluster name assigned to your lab.

- For your initial cluster, start with machine size e2-standard-2 (2 vCPU, 8 GB memory).

- Set your cluster to use the rapid release channel.

Task 1. Create a cluster and deploy your app

- Before you can deploy the application, you'll need to create a cluster and give it the name assigned to your lab.

- Start small and make a zonal cluster with only two (2) nodes.

- Before you deploy the shop, make sure to set up some namespaces to separate resources on your cluster in accordance with the 2 environments: dev and prod.

- After that, deploy the application to the dev namespace with the following command:

Click Check my progress to verify the objective.

Task 2. Migrate to an optimized node pool

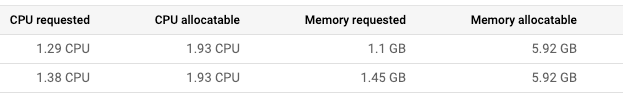

- After successfully deploying the app to the dev namespace, take a look at the node details:

You come to the conclusion that you should make changes to the cluster's node pool:

- There's plenty of leftover RAM from the current deployments, so you should be able to use a node pool with machines that offer less RAM.

- Most of the deployments whose replica count you might increase will require only 100m CPU (100 millicores) per additional pod. You could potentially use a node pool with less total CPU if you configure it to use smaller machines. However, you also need to consider how many deployments will need to scale, and by how much.

- Create a new node pool, using the name assigned to your lab, with custom-2-3584 as the machine type.

- Set the number of nodes to 2.

- Once the new node pool is set up, migrate your application's deployments to the new node pool by cordoning off and draining default-pool.

- Delete the default-pool once the deployments have safely migrated.
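The sizing reasoning behind the new node pool can be sketched numerically. This is a rough illustration, not part of the lab: it assumes that a custom machine type name encodes the vCPU count and the memory in MB (so custom-2-3584 means 2 vCPUs and 3584 MB), that "8G" means 8192 MB, and the helper names are hypothetical.

```python
# Rough sketch: compare total vCPU and memory of two 2-node pools.
# Assumption: a custom machine type name encodes vCPUs and memory in MB,
# so custom-2-3584 means 2 vCPUs and 3584 MB. Helper names are hypothetical.


def parse_custom_machine_type(name: str) -> tuple[int, int]:
    """Return (vcpus, memory_mb) for a name like 'custom-2-3584'."""
    prefix, vcpus, memory_mb = name.split("-")
    if prefix != "custom":
        raise ValueError(f"not a custom machine type: {name}")
    return int(vcpus), int(memory_mb)


def pool_totals(vcpus: int, memory_mb: int, nodes: int) -> tuple[int, int]:
    """Return (total_vcpus, total_memory_mb) for a pool of identical nodes."""
    return vcpus * nodes, memory_mb * nodes


# default-pool: e2-standard-2 (2 vCPU, 8 GB taken as 8192 MB), 2 nodes
default_pool = pool_totals(2, 8 * 1024, nodes=2)
# new pool: custom-2-3584, 2 nodes
optimized_pool = pool_totals(*parse_custom_machine_type("custom-2-3584"), nodes=2)

print("default-pool  :", default_pool)
print("optimized pool:", optimized_pool)
print("memory saved (MB):", default_pool[1] - optimized_pool[1])
```

Under these assumptions, both pools offer 4 vCPUs in total, while total memory drops from 16384 MB to 7168 MB.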

Click Check my progress to verify the objective.

Task 3. Apply a frontend update

You just got it all deployed, and now the dev team wants you to push a last-minute update before the upcoming release! That's OK. You know this can be done without causing downtime.

- Set a pod disruption budget for your frontend deployment.

- Name it onlineboutique-frontend-pdb.

- Set the minimum availability (minAvailable) of your deployment to 1.
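To see what a minimum availability of 1 implies, here is a minimal sketch of the budget arithmetic. It assumes that the number of voluntary disruptions allowed is the count of healthy pods minus minAvailable, never below zero; the function name is hypothetical and the sketch ignores unhealthy or pending pods.

```python
# Sketch of pod disruption budget arithmetic with minAvailable.
# Assumption: allowed voluntary disruptions = healthy pods - minAvailable,
# clamped at zero. The function name is hypothetical.


def allowed_disruptions(healthy_pods: int, min_available: int) -> int:
    """Return how many pods may be evicted voluntarily right now."""
    return max(0, healthy_pods - min_available)


for replicas in (1, 2, 3):
    print(replicas, "healthy pod(s) ->",
          allowed_disruptions(replicas, min_available=1),
          "allowed disruption(s)")
```

With a single healthy frontend pod and a minimum availability of 1, the budget allows no voluntary evictions, which is how it protects the frontend during disruptions such as a node drain.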

Now you can apply your team's update. They've changed the file used for the home page's banner and provided you with an updated Docker image:

- Edit your frontend deployment and change its image to the updated one.

- While editing your deployment, change the imagePullPolicy to Always.

Click Check my progress to verify the objective.

Task 4. Autoscale from estimated traffic

A marketing campaign is coming up that will cause a traffic surge on the OnlineBoutique shop. Normally, you would spin up extra resources in advance to handle the estimated traffic spike. However, if the traffic spike is larger than anticipated, you may get woken up in the middle of the night to spin up more resources to handle the load.

You also want to avoid running extra resources for any longer than necessary. To both lower costs and save yourself a potential headache, you can configure the Kubernetes deployments to scale automatically when the load begins to spike.

- Apply horizontal pod autoscaling to your frontend deployment in order to handle the traffic surge.

- Scale based on a target CPU percentage of 50.

- Set the pod scaling between 1 minimum and the maximum assigned to your lab.

Of course, you want to make sure that users won’t experience downtime while the deployment is scaling.

- To make sure the scaling action occurs without downtime, set the deployment to scale with a target CPU percentage of 50%. This should leave plenty of headroom to handle the load while the autoscaling occurs.

- Set the deployment to scale between 1 minimum and the maximum number of pods assigned to your lab.

But what if the spike exceeds the compute resources you currently have provisioned? You may need to add additional compute nodes.

- Next, ensure that your cluster is able to automatically spin up additional compute nodes if necessary. However, scaling up isn't the only case you can handle with autoscaling.

- Thinking ahead, you configure both a minimum number of nodes and a maximum number of nodes. This way, the cluster can add nodes when traffic is high, and reduce the number of nodes when traffic is low.

- Update your cluster autoscaler to scale between 1 node minimum and 6 nodes maximum.
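As a back-of-the-envelope illustration of the 1 to 6 node bounds, the sketch below estimates how many nodes a given total CPU request would need. It is a simplification: the real cluster autoscaler decides by checking whether pending pods can be scheduled, not by dividing totals. The function name and the allocatable CPU per node (1900m) are hypothetical values chosen for the example.

```python
import math

# Rough node-count estimate, clamped to the autoscaling bounds from the task.
# Assumptions: only CPU requests matter, pods pack perfectly onto nodes, and
# each node offers a hypothetical 1900m of allocatable CPU.


def estimate_nodes(total_requested_mcpu: int,
                   allocatable_mcpu_per_node: int = 1900,
                   min_nodes: int = 1,
                   max_nodes: int = 6) -> int:
    """Return the estimated node count, kept within [min_nodes, max_nodes]."""
    needed = math.ceil(total_requested_mcpu / allocatable_mcpu_per_node)
    return max(min_nodes, min(max_nodes, needed))


for requested in (500, 5000, 20000):
    print(f"{requested}m requested -> {estimate_nodes(requested)} node(s)")
```

The clamp is the part that maps to the task: low demand never drops the estimate below 1 node, and high demand never pushes it above 6.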

Click Check my progress to verify the objective.

- Lastly, run a load test to simulate the traffic surge.

Fortunately, OnlineBoutique was designed with built-in load generation. Currently, your dev instance is simulating traffic on the store with ~10 concurrent users.

- In order to better replicate the traffic expected for this event, run the load generation from your loadgenerator pod with a higher number of concurrent users with this command. Replace YOUR_FRONTEND_EXTERNAL_IP with the IP of the frontend-external service:

- Now, observe your Workloads and monitor how your cluster handles the traffic spike.

You should see your recommendationservice crashing or, at least, heavily struggling from the increased demand.

- Apply horizontal pod autoscaling to your recommendationservice deployment. Scale based on a target CPU percentage of 50 and set the pod scaling between 1 minimum and 5 maximum.
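To get a feel for how a 50% CPU target with bounds of 1 and 5 behaves, the following sketch applies the replica formula described in the Kubernetes horizontal pod autoscaler documentation: desired replicas = ceil(current replicas × current utilization / target utilization), clamped to the configured bounds. It is a simplified model that ignores the autoscaler's tolerance band, pod readiness, and stabilization windows; the function name and sample utilization figures are hypothetical.

```python
import math

# Simplified model of the horizontal pod autoscaler replica calculation.
# Assumption: desired = ceil(current * current_cpu / target_cpu), then
# clamped to [min_replicas, max_replicas]. Tolerance and stabilization
# behaviour of the real controller are deliberately left out.


def desired_replicas(current_replicas: int,
                     current_cpu_percent: float,
                     target_cpu_percent: float = 50,
                     min_replicas: int = 1,
                     max_replicas: int = 5) -> int:
    """Return the replica count the simplified formula would ask for."""
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical utilization samples for recommendationservice.
for replicas, cpu in ((1, 120), (3, 200), (4, 10)):
    print(f"{replicas} replica(s) at {cpu}% CPU ->",
          desired_replicas(replicas, cpu), "replica(s)")
```

In this model, one pod at 120% of its CPU request scales to 3 pods, a heavier spike is capped at the maximum of 5, and a quiet period scales back toward the minimum of 1.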

Task 5. (Optional) Optimize other services

While applying horizontal pod autoscaling to your frontend service keeps your application available during the load test, if you monitor your other workloads, you'll notice that some of them are being pushed heavily for certain resources.

If you still have time left in the lab, inspect some of your other workloads and try to optimize them by applying autoscaling towards the proper resource metric.

You can also see if it would be possible to further optimize your resource utilization with Node Auto Provisioning.

Congratulations!

Congratulations! In this lab, you have successfully deployed the OnlineBoutique app to Google Kubernetes Engine and made some configuration changes that have been recommended for cost optimization. You have also applied horizontal pod autoscaling to your frontend and recommendationservice deployments to handle the traffic surge. You have also optimized other services by applying autoscaling towards the proper resource metric.

Earn your next skill badge

This self-paced lab is part of the Optimize Costs for Google Kubernetes Engine skill badge course. Completing this skill badge course earns you the badge above, to recognize your achievement. Share your badge on your resume and social platforms, and announce your accomplishment using #GoogleCloudBadge.

Manual Last Updated March 22, 2024

Lab Last Tested July 27, 2023

Copyright 2024 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.