Protecting Data with NetApp BlueXP & Cloud Volumes ONTAP for Google Cloud

This lab was developed with our partner, NetApp. Your personal information may be shared with NetApp, the lab sponsor, if you have opted-in to receive product updates, announcements, and offers in your Account Profile.

GSP876

Overview

In this lab, you will learn how to implement a full data protection strategy with NetApp Cloud Volumes ONTAP and BlueXP capabilities. You will get practical experience in managing NetApp Snapshots and using them for recovery and cloning purposes. You will also be introduced to the Cloud Volumes ONTAP replication engine, which allows you to implement cross-region and cross-cloud disaster recovery. This lab is derived from BlueXP's documentation published by NetApp.

What you'll learn

In this lab, you will learn how to perform the following tasks:

- Manage NetApp Snapshots for local protection

- Use NetApp Snapshots for recovery and cloning purposes

- Replicate data across regions for disaster recovery

- Enable ransomware protection

Prerequisites

This is a fundamental lab and no prior knowledge is required. Familiarity with NetApp BlueXP and with foundational tasks in Google Cloud is recommended. The lab, Getting Started with NetApp BlueXP & Cloud Volumes ONTAP for Google Cloud, is a good introduction.

Setup and requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab. Remember, once you start, you cannot pause a lab.

How to start your lab and sign in to the Google Cloud console

- Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is the Lab Details panel with the following:

- The Open Google Cloud console button

- Time remaining

- The temporary credentials that you must use for this lab

- Other information, if needed, to step through this lab

- Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

The lab spins up resources, and then opens another tab that shows the Sign in page.

Tip: Arrange the tabs in separate windows, side-by-side.

Note: If you see the Choose an account dialog, click Use Another Account.

- If necessary, copy the Username below and paste it into the Sign in dialog.

{{{user_0.username | "Username"}}}

You can also find the Username in the Lab Details panel.

- Click Next.

- Copy the Password below and paste it into the Welcome dialog.

{{{user_0.password | "Password"}}}

You can also find the Password in the Lab Details panel.

- Click Next.

Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

Note: Using your own Google Cloud account for this lab may incur extra charges.

- Click through the subsequent pages:

- Accept the terms and conditions.

- Do not add recovery options or two-factor authentication (because this is a temporary account).

- Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Activate Cloud Shell

Cloud Shell is a virtual machine that is loaded with development tools. It offers a persistent 5GB home directory and runs on Google Cloud. Cloud Shell provides command-line access to your Google Cloud resources.

- Click Activate Cloud Shell at the top of the Google Cloud console.

When you are connected, you are already authenticated, and the project is set to your Project_ID.

gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab-completion.

- (Optional) You can list the active account name with this command:

- Click Authorize.

Output:

- (Optional) You can list the project ID with this command:

Output:

Note: For full documentation of gcloud, in Google Cloud, refer to the gcloud CLI overview guide.
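The commands for the two optional checks above are not preserved in this copy of the lab. A minimal sketch, assuming they are the standard `gcloud auth list` and `gcloud config list project` commands, is shown here. The `show_gcloud_context` wrapper is a hypothetical helper, added only so that the snippet fails gracefully outside Cloud Shell.

```shell
# Hypothetical helper: print the active account and project if gcloud is installed.
show_gcloud_context() {
  if command -v gcloud >/dev/null 2>&1; then
    gcloud auth list             # lists credentialed accounts; the active one is marked
    gcloud config list project   # prints the project set in the current configuration
  else
    echo "gcloud not found; run this in Cloud Shell" >&2
    return 127
  fi
}

show_gcloud_context || true
```

In Cloud Shell, the first command may trigger the Authorize prompt mentioned above.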

Set up service accounts

- To start, in your Cloud Shell window, run the following commands to set some environment variables:

- Next, run the following commands to assign roles to the pre-created netapp-cloud-manager service account:

Click Check my progress to verify that you've performed the above task.

- Lastly, create the netapp-cvo service account and assign it the relevant roles:

Click Check my progress to verify that you've performed the above task.
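The lab's exact commands and role list are not reproduced in this copy. As a rough sketch of the pattern only, the snippet below composes the two `gcloud` commands typically used to create a service account and bind a role to it. It uses the netapp-cvo account and the Storage Admin role (`roles/storage.admin`) mentioned later in the lab. The project ID is a placeholder, and the commands are printed rather than executed so they can be reviewed first.

```shell
# Placeholder project ID (assumption). In Cloud Shell you could instead use:
#   PROJECT_ID="$(gcloud config get-value project)"
PROJECT_ID="my-lab-project"

SA_NAME="netapp-cvo"
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"

# Compose the commands as strings so they can be reviewed before running.
CREATE_CMD="gcloud iam service-accounts create ${SA_NAME} --display-name=${SA_NAME}"
BIND_CMD="gcloud projects add-iam-policy-binding ${PROJECT_ID} --member=serviceAccount:${SA_EMAIL} --role=roles/storage.admin"

echo "$CREATE_CMD"
echo "$BIND_CMD"
```

The roles that the lab assigns to netapp-cloud-manager are not listed here because the source does not preserve them.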

Set up a new ONTAP storage environment

In this section, you will log in to NetApp BlueXP, NetApp’s data lifecycle management platform, and create a new Cloud Volumes ONTAP storage environment on Google Cloud infrastructure.

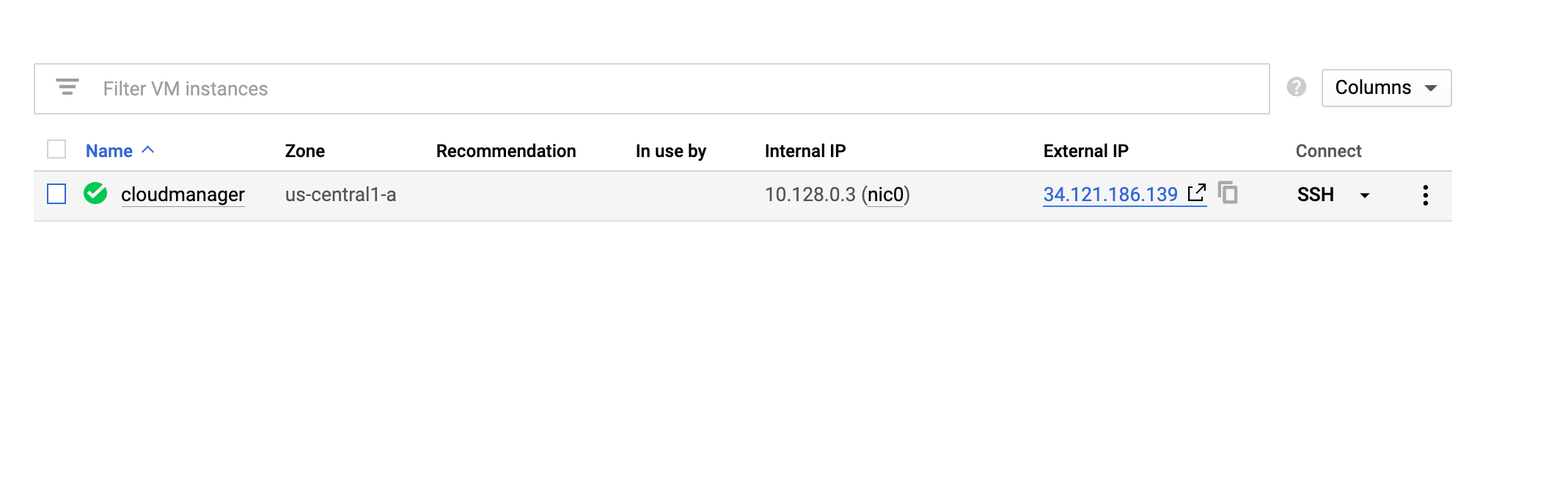

- From the Navigation Menu, go to Compute Engine > VM instances. You should see the cloudmanager VM already deployed for you.

- Click the External IP to connect to the VM in a new window. Use http://<IP Address>/.

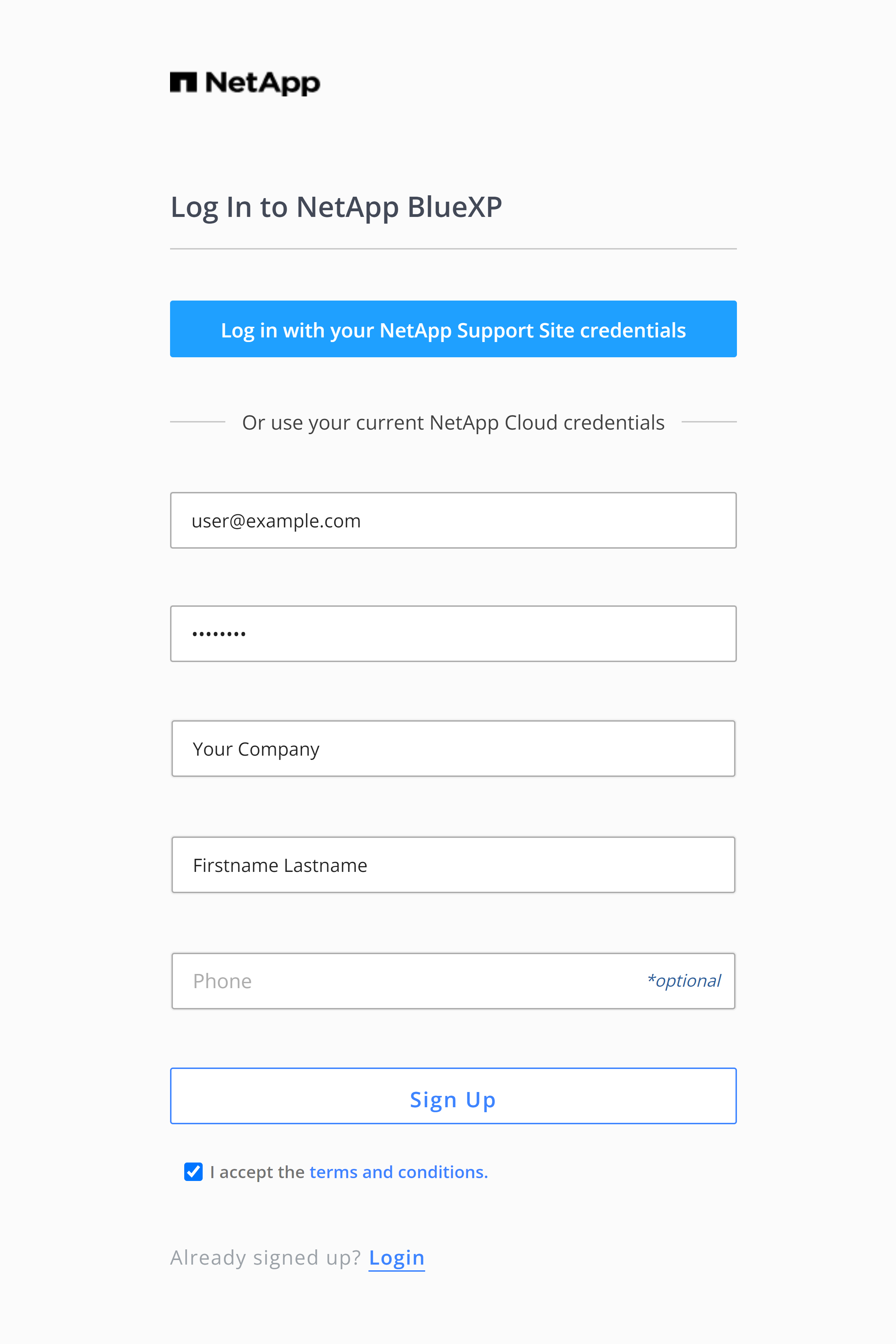

- Once you are on the BlueXP SaaS setup page, you can set up your NetApp Account.

First click Sign Up, then enter in your email address and a password. Fill out the rest of the fields to authenticate yourself with NetApp’s Cloud Central and click Sign Up to log in to BlueXP.

-

Next, you will be prompted to create an Account Name and a BlueXP Name. For these, you can just use

user. Click Let's Start. Click on Lets go to BlueXP. -

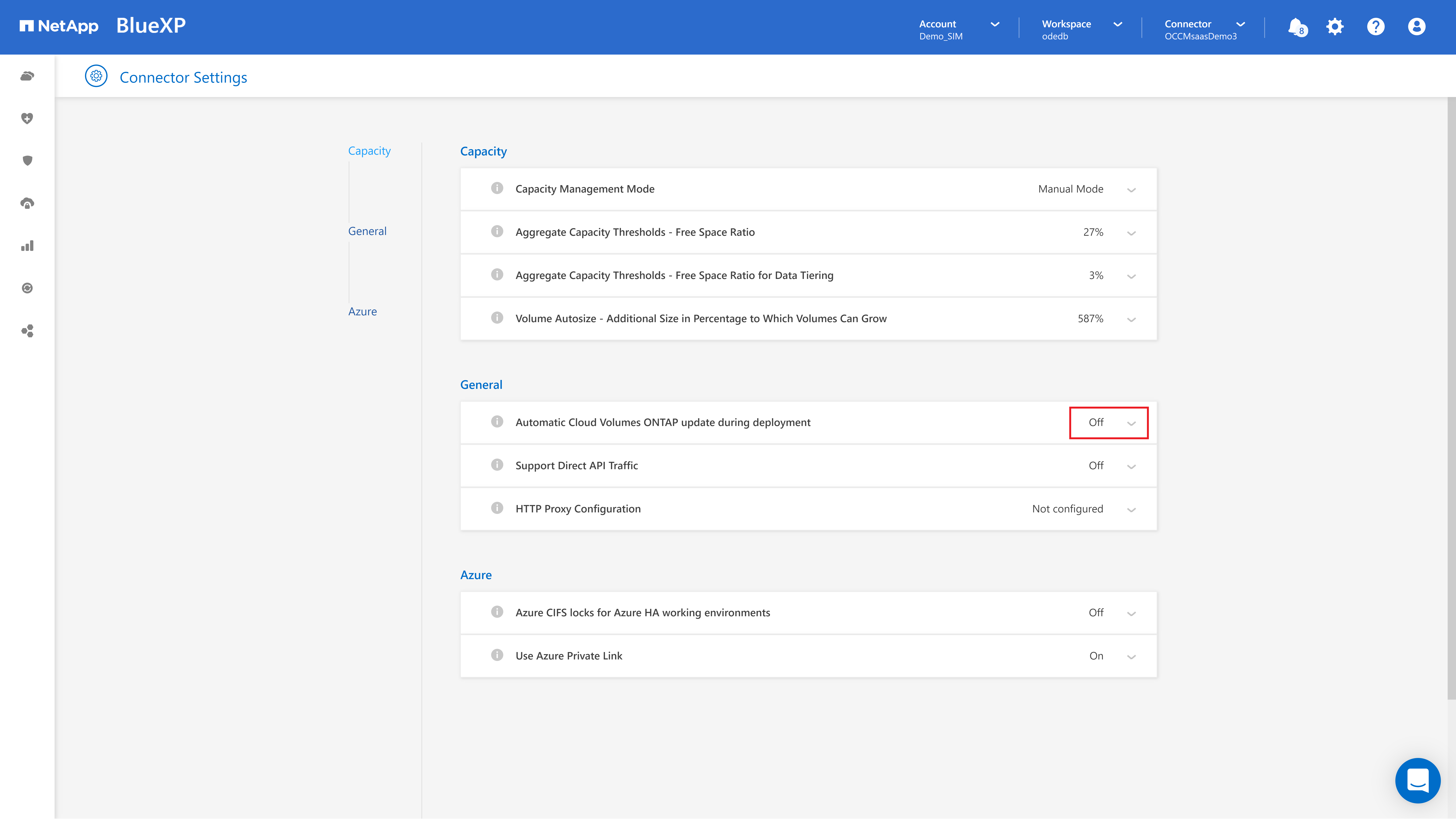

Before you add a working environment, navigate to the gear icon on the top-right of the page then click Connector Settings > General.

- For Automatic Cloud Volumes ONTAP update during deployment, click the box and then uncheck the Automatically update Cloud Volumes ONTAP box to turn the updates off. Click Save. Your configuration settings should resemble the following:

- Click BlueXP on the top left to navigate to the home page. Click Add Working Environment.

- Add Working Environment: select Google Cloud Platform.

- Choose Type: Cloud Volumes ONTAP Single Node and click Add New.

- Specify a cluster name, optionally add labels, and then specify a password for the default admin account. Click Continue.

Note: The netapp-cvo service account has been pre-created for you with the Storage Admin role.

- On the Services page, toggle off Backup to Cloud (you will enable it later in the lab) and click Continue.

- Location & Connectivity: For the GCP Region and GCP Zone, use the region and zone assigned to your lab. For the VPC, Subnet, and Firewall Policy, leave as default. Select the checkbox to confirm network connectivity to Google Cloud storage for data tiering, and click Continue.

- Cloud Volumes ONTAP Charging Methods & NSS Account: Select Freemium (Up to 500 GiB) and click Continue.

- Preconfigured Packages: click Change Configuration.

- On the Licensing section, first click Change version and select the ONTAP-9.7P5 version from the drop-down. Click Apply. Next, from the Machine Type drop-down list, select n1-highmem-4. Click Continue.

- Underlying Storage Resources: Choose Standard, and for the Google Cloud Disk Size select 100 GB. Click Continue.

- WORM (write once, read many): keep the settings as default and click Continue.

-

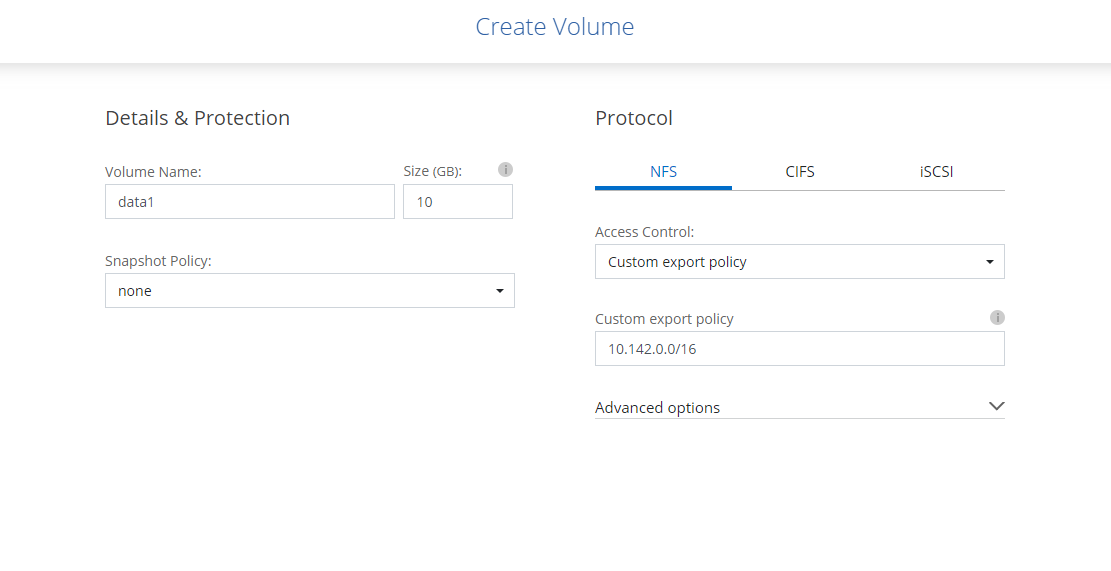

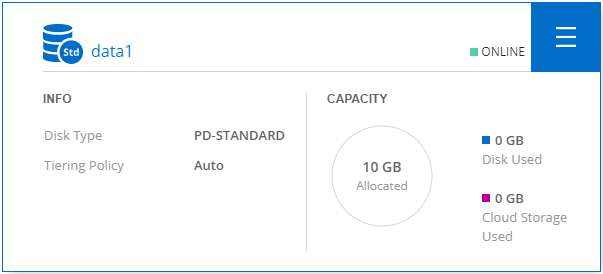

Create Volume: Create a NetApp volume that will be used in the next sections. Use the following details:

- Set Volume Name to

data1 - Set Size to

10(size set in GB) - Set Snapshot Policy to

none - Select NFS as Protocol

- Verify Access Control is set to

Custom export policy - Verify Custom export policy is set with the VPC’s CIDR range

- Click Continue

Your configuration settings should resemble the following:

- Create Volume - Usage Profile Disk Type & Tiering Policy: use the following configurations:

- Verify the Storage Efficiency is marked (to enable NetApp's data footprint reduction technologies).

- Verify the Disk Type is set to Standard (volume will be created in Standard-backed PD).

- Leave Tiering data to object storage with default settings.

- Click Continue.

- Review & Approve: Review and confirm your selections:

- Review details about the configuration.

- Click More information to review details about support and the Google Cloud resources that BlueXP will purchase.

- Select both checkboxes.

- Click Go.

Great! You're done with your working environment setup. Now, sit back while BlueXP deploys your Cloud Volumes ONTAP system and creates a NetApp volume. This should take around ten minutes to complete. In the meantime, you can check out the NetApp documentation for Cloud Volumes ONTAP for Google Cloud.

Click Check my progress to verify that you've performed the above task.

NetApp Snapshots management

In this section, you will learn about NetApp Snapshots and leverage them for instant recovery and cloning. A NetApp Snapshot is a highly efficient, read-only, point-in-time image of a NetApp volume that provides local protection. NetApp Snapshots do not affect system performance, are created instantly regardless of the amount of data in the volume, and consume storage space only when changes are made to existing data. See the NetApp documentation center for more information.

Access the volume

In this section, set up access to the Cloud Volumes ONTAP volume, created in the previous section, from a Linux VM using the NFS protocol.

- Go back to the Google Cloud Console. Run the following commands in Cloud Shell to reset your PROJECT_ID environment variable and create a new linux-vm instance:

- After the VM has been deployed, navigate back to the NetApp BlueXP page and double-click on your Cloud Volumes ONTAP working environment to access the Volumes page.

- On the Volumes page, locate the data1 volume and click on its triple-bar.

- From the list of operations revealed, click on Mount Command.

- Then click on Copy to copy the command to your clipboard.

- Back in the Cloud Console, from the Navigation Menu, navigate to Compute Engine > VM instances.

- On the VM instances page, locate the linux-vm instance and connect to it by clicking on SSH.

- In the linux VM SSH window, create a local directory under /mnt named data1 that will serve as a mount point:

- Before mounting, use the following command to install nfs-common:

- Next, paste the Mount Command you copied earlier and replace <dest_dir> with the /mnt/data1 directory you created. Also add -o vers=3 to the command to mount with NFS v3. Press ENTER to mount the Cloud Volumes ONTAP volume using NFS.

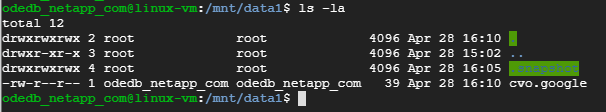
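The edit to the copied Mount Command can be sketched as a small text transformation. The snippet below assumes the copied command has the form `mount <ip>:/<volume> <dest_dir>`. The IP address and export path are placeholders, not values from the lab. The snippet only builds and prints the final command, so you can compare it with what you type in the SSH window.

```shell
# Hypothetical command as copied from BlueXP; the IP address and export path are placeholders.
COPIED='mount 10.0.0.5:/data1 <dest_dir>'
MOUNT_POINT='/mnt/data1'

# 1) Replace the <dest_dir> placeholder with the mount point.
# 2) Add "-o vers=3" right after "mount" to request NFS v3.
MOUNT_CMD=$(printf '%s\n' "$COPIED" \
  | sed -e "s|<dest_dir>|${MOUNT_POINT}|" -e 's|^mount |mount -o vers=3 |')

# Review the result, then run it on the VM with sudo.
echo "sudo ${MOUNT_CMD}"
```

The same substitution applies to the restored and cloned volumes later in the lab, with a different mount point.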

- Verify that your volume is properly mounted using the following native Linux command:

You should see the data1 volume mounted on /mnt/data1.
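The lab's own verification command is not preserved in this copy. One possible check, sketched below, looks up the mount point in `/proc/mounts` and then prints its usage with `df -h`. The `is_mounted` function is a hypothetical helper, not part of the lab.

```shell
# Hypothetical helper: succeeds if the given path is listed as a mount point.
is_mounted() {
  # Each line of /proc/mounts is: device, mount point, filesystem type, options, ...
  awk -v mp="$1" '$2 == mp { found = 1 } END { exit !found }' /proc/mounts 2>/dev/null
}

MOUNT_POINT="/mnt/data1"
if is_mounted "$MOUNT_POINT"; then
  df -h "$MOUNT_POINT"     # shows the NFS export, size, and usage
else
  echo "$MOUNT_POINT is not mounted"
fi
```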

- Finally, create a file with a single line within the mounted volume using the following native Linux command:

Great! You have just mounted the volume created in the previous section. Now let's see what NetApp Snapshots can do.

Click Check my progress to verify that you've performed the above task.

Creating NetApp Snapshots

In each NetApp volume up to 1,023 Snapshots can be retained. Now you'll configure Cloud Volumes ONTAP to automatically create NetApp Snapshots and manually create one.

- Go back to BlueXP and double-click the Cloud Volumes ONTAP working environment to enter the Volumes page.

- On the Volumes page, locate the data1 volume, click on its triple-bar, and from the list of operations revealed click on Edit.

- From the Edit page, set the Snapshot Policy to default. Note the schedules at which NetApp Snapshots are created automatically and the retention for each. Click Update.

- On the Volumes page, click on the triple-bar of the data1 volume and from the list of operations revealed click on Create a Snapshot copy.

- On the Create a Snapshot page, leave the Snapshot Copy Name unchanged and click on Create. Note the notification that appears when redirected back to the Volumes page.

Well done! You have just enabled automatic Snapshot creation for your volume (if you stay long enough, you can see Snapshots accumulating) and instantly created a manual Snapshot. Now, see what you can do with it.

Click Check my progress to verify that you've performed the above task.

Restoring from NetApp Snapshots

It's time to use a Snapshot copy to recover data. You can recover individual files and directories, LUNs (when using iSCSI), or recover the entire content of a volume. In this section you will practice file restore through an NFS client as well as volume restore through BlueXP.

- In the linux-vm SSH window, use the following native Linux command to append a line to the file you have previously created:

- Go back to the Volumes page, click on the triple-bar of the data1 volume and from the list of operations revealed click on Create a Snapshot copy.

- On the Create a Snapshot page, leave the Snapshot Copy Name unchanged and click on Create.

- Return to your linux-vm SSH window. List the /mnt/data1 directory including hidden items. Use the following commands:

Your output should look like the following. Note the file you created and the .snapshot directory.

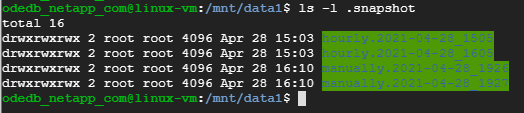

- All the Snapshots created for that volume are accessible (read-only) through the .snapshot directory. Next, list the volume's Snapshots by running the following command from your linux-vm:

Your output should look similar to this: (note the two manual Snapshots you have created).

- Recover the first version of the cvo.google file by simply copying it from the first manual snapshot you created. Use the following cp command, and replace <timestamp> with the timestamp of the first manual snapshot. (If typing, use the Tab key for auto-completion.)

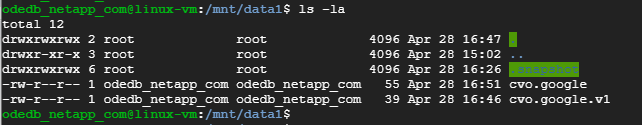

- When the copy is done, list the /mnt/data1 directory including hidden items using the following command:

Your output should look like this:

- You can use the following commands to see the differences between the files:
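The single-file restore flow above can be rehearsed locally without a NetApp volume. The sketch below is a simulation only. A temporary directory stands in for /mnt/data1, a plain copy under `.snapshot` stands in for a NetApp Snapshot, and the snapshot name and file contents are made up for illustration. The file names cvo.google and cvo.google.v1 follow the lab.

```shell
# Local simulation only: a temp directory stands in for /mnt/data1, and a plain
# directory copy stands in for a NetApp Snapshot (real Snapshots are created by ONTAP).
VOL=$(mktemp -d)
SNAP="$VOL/.snapshot/manual_snap_1"            # hypothetical snapshot name

echo "first line" > "$VOL/cvo.google"          # create the file with a single line
mkdir -p "$SNAP"
cp "$VOL/cvo.google" "$SNAP/cvo.google"        # "take" the first snapshot

echo "second line" >> "$VOL/cvo.google"        # append a line after the snapshot

ls "$VOL/.snapshot"                            # list the available snapshots
cp "$SNAP/cvo.google" "$VOL/cvo.google.v1"     # recover the first version from the snapshot

ls -a "$VOL"                                   # shows cvo.google, cvo.google.v1, and .snapshot
diff "$VOL/cvo.google.v1" "$VOL/cvo.google" || true   # diff exits 1 when the files differ
```

On the real volume, the `.snapshot` directory is read-only and is populated by ONTAP, so only the `ls`, `cp`, and `diff` steps apply there.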

Restoring single file, checked! Now see how it's done for the whole volume.

Click Check my progress to verify that you've performed the above task.

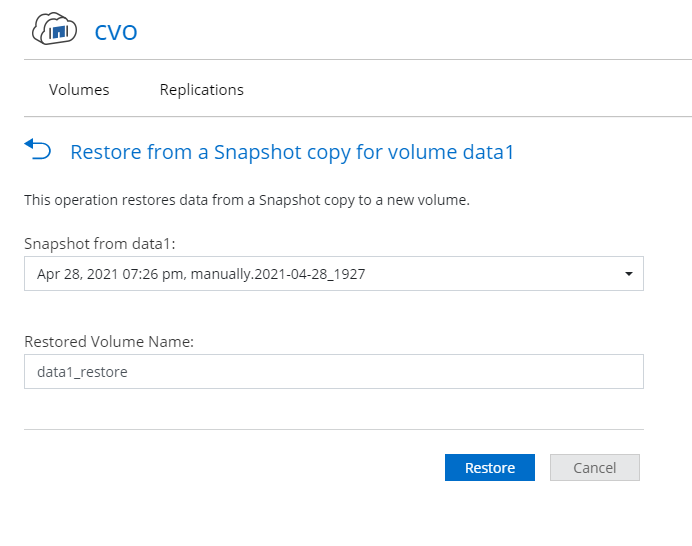

- Go back to BlueXP and from the Volumes page, click on the triple-bar of the data1 volume. From the list of operations revealed, click on Restore from Snapshot copy.

- From the Restore from Snapshot copy page, select the last manual snapshot created, use the default Restored Volume Name and click Restore.

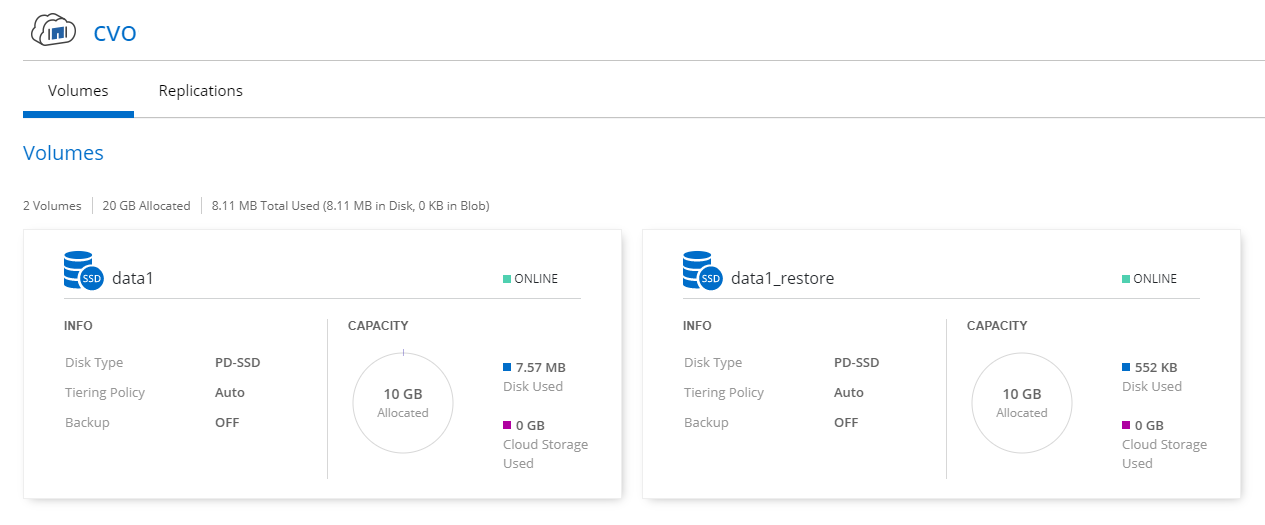

When the restore is completed, the volume's page should look like this:

Restore is instant regardless of the volume's size or used capacity. No data is copied; the restore only updates pointers in NetApp's WAFL file system. Check out this video to see how it works. Now, access the restored volume.

- Click on the triple-bar of the data1_restore volume. From the list of operations revealed, click on Mount Command and then click on Copy to copy the command to your clipboard.

- In the linux VM SSH window, create a local directory under /mnt named data1_restore that will serve as a mount point:

- Next, paste the Mount Command you copied earlier and replace <dest_dir> with the /mnt/data1_restore directory you created, and add -o vers=3 to the command to use NFS v3:

- Press ENTER to mount the restored volume.

- Verify that your volume is properly mounted and list its content using the following native Linux commands:

Because a Snapshot represents a point in time, and you restored the volume to a Snapshot created before the single-file restore, only the file named cvo.google appears.

Instantly restoring the entire volume, checked! Ready to move on?

Click Check my progress to verify that you've performed the above task.

Cloning from NetApp Snapshots

Snapshots can also be used for creating instant thin clones using Cloud Volumes ONTAP's FlexClone technology. With FlexClone you can copy even the largest datasets almost instantaneously. That makes it ideal for situations in which you need multiple copies of identical datasets or temporary copies of a dataset, such as when testing your disaster recovery copy (discussed later in the Cross-Cloud / cross-region disaster recovery section). Let's do some cloning!

-

From the Volumes page, click on the triple-bar of the data1 volume. From the list of operations revealed, click on Clone.

-

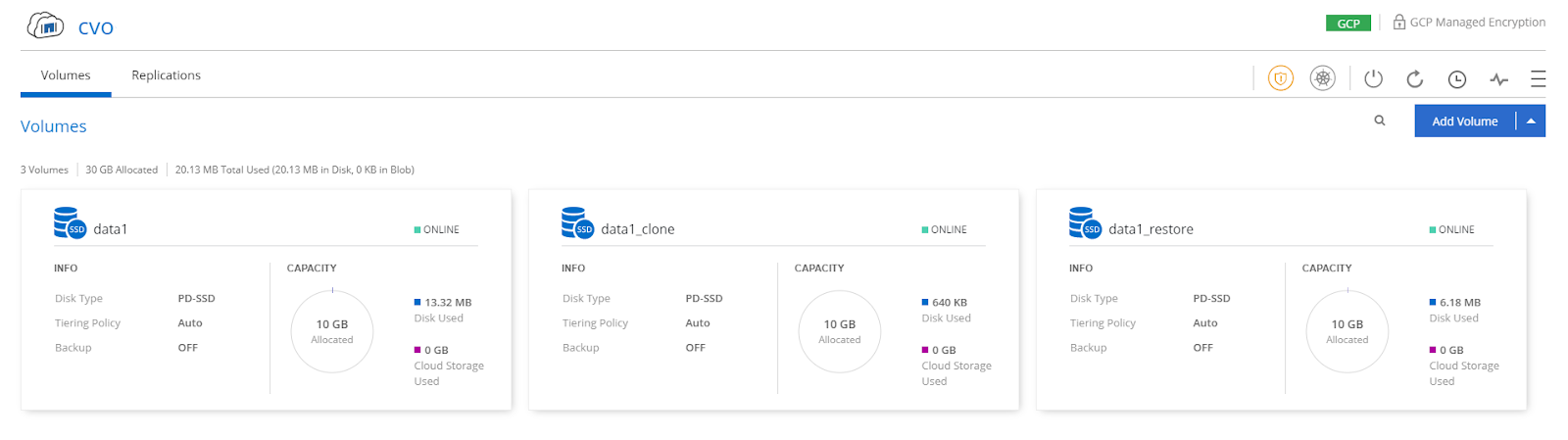

On the Clone page, use the default Clone Volume Name and click Clone.

When cloning is completed, the volume's page should look like this:

As with restore, cloning is instant regardless of the volume's size or used capacity, and no data is copied. Now, let's access the cloned volume.

- Click on the triple-bar of the data1_clone volume. From the list of operations revealed, click on Mount Command and then click on Copy to copy the command to your clipboard.

- In the linux VM SSH window, create a local directory under /mnt named data1_clone that will serve as a mount point:

- Next, paste the Mount Command (copied on step 3) and replace <dest_dir> with the /mnt/data1_clone directory you created. Also add -o vers=3 to the command to use NFS v3. Press ENTER to mount the cloned volume.

- Verify that your volume is properly mounted and list its content using the following native Linux commands:

The clone operation creates a new snapshot which represents the current state of the volume, after the single file restore. Therefore, two files named cvo.google and cvo.google.v1 appear.

Instantly cloning a volume, checked! Ready to try something new?

Cross-Cloud / cross-region disaster recovery

Every ONTAP system, including Cloud Volumes ONTAP for Google Cloud, includes the NetApp SnapMirror data replication engine. With SnapMirror you can replicate data between working environments located on BlueXP's Storage Canvas. SnapMirror is a highly efficient data transfer engine that replicates NetApp Snapshots while preserving storage efficiencies (deduplication and compression) from one ONTAP system to another, enabling cross-region and cross-cloud replication. SnapMirror use cases include disaster recovery, long-term retention, and migration.

BlueXP simplifies data replication between volumes on separate systems using SnapMirror. BlueXP purchases the required disks, configures relationships, applies the replication policy, and then initiates the baseline transfer between volumes. All you have to do is a drag-and-drop operation of one working environment over the other to identify the source volume and the destination volume, and then choose a replication policy and schedule.

Together, local Snapshots, backup to Google Cloud Storage, and SnapMirror make it easy to implement a full data protection strategy.

- Check out the Cross-Region Storage Failover and Failback with Cloud Volumes ONTAP blog post to see how it's done.

Ransomware prevention (optional)

FPolicy is a file access notification framework that is used to monitor and manage file access events on Cloud Volumes ONTAP. FPolicy can operate in two modes. One mode uses external FPolicy servers to process and act upon notifications. The other uses the internal, native FPolicy server for simple file blocking based on extensions. Both act as a proactive, preventive solution to limit ransomware and other malware infections. In this section you will enable the native FPolicy server.

-

Go back to BlueXP and double click on Cloud Volumes ONTAP working environment to enter the Volumes page.

-

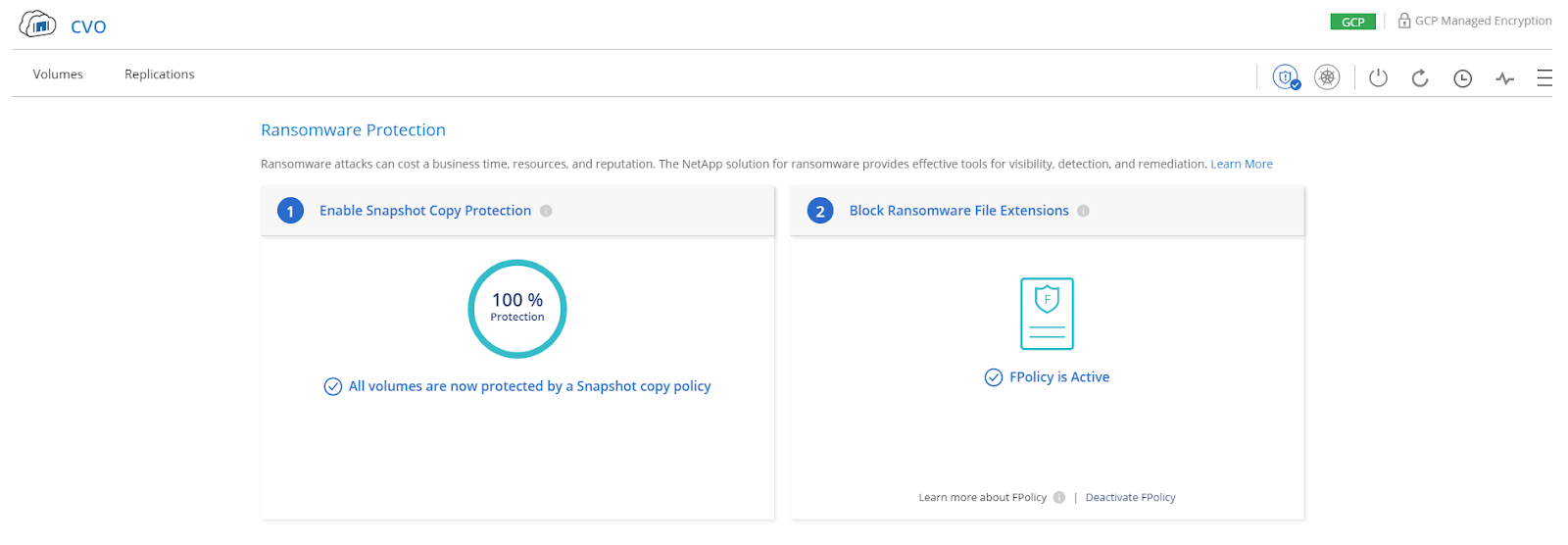

On the right side menu of the page, locate the orange colored shield (Ransomware Protection) and click it.

-

On the Ransomware Protection page, click Activate FPolicy.

Once the status has changed, the page will look like this, and the shield will be colored blue. (It takes a few seconds for the status to change; you can click on Volumes and then on the shield again to accelerate it.)

- Test this: In the linux VM SSH window, try to create a file with a well-known ransomware extension, in the data1 volume using the following command:

The FPolicy engine prevents file operations on files with an extension that appears in the blocking policy. Therefore you should see a permission denied message like the following:
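To picture what "blocking based on extensions" means, the sketch below mimics the decision locally in shell. It is an illustration of the idea only, not how ONTAP implements FPolicy. The extension list is an assumption chosen for the example, not the policy that BlueXP installs, and `is_blocked` is a hypothetical helper.

```shell
# Illustrative only: mimics "block by file extension" locally.
# The extension list is an assumption, not the policy BlueXP actually installs.
BLOCKED_EXTENSIONS="wcry locky"

is_blocked() {
  ext="${1##*.}"                    # text after the last dot
  [ "$ext" != "$1" ] || return 1    # no dot in the name: nothing to match
  for blocked in $BLOCKED_EXTENSIONS; do
    [ "$ext" = "$blocked" ] && return 0
  done
  return 1
}

for name in report.txt invoice.wcry notes; do
  if is_blocked "$name"; then
    echo "$name: blocked"
  else
    echo "$name: allowed"
  fi
done
```

On the real volume the check happens on the storage system, so the NFS client simply sees the operation rejected.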

Great! By enabling FPolicy, BlueXP created a policy that blocks any file operation done through NFS and SMB clients on files with known ransomware extensions. And remember, NetApp Snapshots are immutable, so if a security breach occurs and ransomware wreaks havoc, you can easily and instantly recover your data to a point in time (as you did in the Restoring from NetApp Snapshots section).

Interested in knowing more? Check out this guidebook.

Congratulations!

In this lab, you honed your NetApp Cloud solutions skills by learning how your mission-critical data can be fully protected using Cloud Volumes ONTAP and BlueXP. You have also learned how to manage and leverage NetApp Snapshots and strengthen your anti-ransomware posture. In addition, you have learned, through the Disaster Recovery video, how you can replicate data cross-cloud and cross-region for disaster recovery scenarios.

Next steps / Learn more

Be sure to check out the following to receive more hands-on practice with NetApp:

- NetApp on the Google Cloud Marketplace!

- Get started with your 30-day Cloud Volumes ONTAP free trial

Google Cloud training and certification

...helps you make the most of Google Cloud technologies. Our classes include technical skills and best practices to help you get up to speed quickly and continue your learning journey. We offer fundamental to advanced level training, with on-demand, live, and virtual options to suit your busy schedule. Certifications help you validate and prove your skill and expertise in Google Cloud technologies.

Manual Last Updated July 28, 2023

Lab Last Tested July 28, 2023

Copyright 2024 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.