Cloud Functions 2nd Gen: Qwik Start

- GSP1089

- Overview

- Objectives

- Setup and requirements

- Task 1. Enable APIs

- Task 2. Create an HTTP function

- Task 3. Create a Cloud Storage function

- Task 4. Create a Cloud Audit Logs function

- Task 5. Deploy different revisions

- Task 6. Set up minimum instances

- Task 7. Create a function with concurrency

- Congratulations!

GSP1089

Overview

Cloud Functions (2nd gen) is the next version of Google Cloud Functions, Google Cloud's Functions-as-a-Service offering. This new version comes with an advanced feature set and is powered by Cloud Run and Eventarc, giving you finer control over performance and scalability, as well as over the function runtime and triggers from more than 90 event sources.

In this lab, you will create Cloud Functions that respond to HTTP calls, and get triggered by Cloud Storage events and Cloud Audit Logs. You will also deploy multiple revisions of a Cloud Function and explore new settings.

What's New?

This new version of Cloud Functions provides an enhanced FaaS experience powered by Cloud Run, Cloud Build, Artifact Registry, and Eventarc.

Enhanced Infrastructure

- Longer request processing: Run your Cloud Functions longer than the 5 minute default, making it easier to run longer request workloads such as processing large streams of data from Cloud Storage or BigQuery. For HTTP functions, this is up to 60 minutes. For event-driven functions, this is currently up to 10 minutes.

- Larger instances: Take advantage of up to 16GB of RAM and 4 vCPUs on Cloud Functions allowing larger in-memory, compute-intensive and more parallel workloads.

- Concurrency: Process up to 1000 concurrent requests with a single function instance, minimizing cold starts and improving latency when scaling.

- Minimum instances: Provide for pre-warmed instances to cut your cold starts and make sure the bootstrap time of your application does not impact the application performance.

- Traffic splitting: Support multiple versions of your functions, split traffic between different versions and roll your function back to a prior version.

Broader Event coverage and CloudEvents support

- Eventarc Integration: Cloud Functions now includes native support for Eventarc, which brings more than 125 event sources using Cloud Audit Logs (BigQuery, Cloud SQL, Cloud Storage...). Cloud Functions also still supports events from custom sources by publishing to Cloud Pub/Sub directly.

- CloudEvent format: All event-driven functions adhere to industry standard CloudEvents (cloudevents.io), regardless of the source, to ensure a consistent developer experience. The payloads are sent via a structured CloudEvent with a cloudevent.data payload and implement the CloudEvent standard.
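To make the CloudEvent format concrete, here is a minimal sketch of a structured event and a helper that separates its context attributes from its data payload. The attribute names (specversion, id, source, type, data) come from the CloudEvents specification; the sample values and the describeCloudEvent helper are illustrative assumptions, not part of the lab.

```javascript
// A hand-written example of a structured CloudEvent, shaped like the events
// delivered to event-driven functions. All values are illustrative.
const sampleEvent = {
  specversion: '1.0',
  id: '1234567890',
  source: '//storage.googleapis.com/projects/_/buckets/example-bucket',
  type: 'google.cloud.storage.object.v1.finalized',
  datacontenttype: 'application/json',
  time: '2024-03-06T12:00:00Z',
  data: { bucket: 'example-bucket', name: 'random.txt' },
};

// Hypothetical helper: summarize the context attributes and return the
// payload (cloudevent.data) separately.
function describeCloudEvent(cloudevent) {
  const { id, type, source, data } = cloudevent;
  return { summary: `${type} from ${source} (id ${id})`, payload: data };
}

const described = describeCloudEvent(sampleEvent);
console.log(described.summary);
console.log(described.payload);
```

Because every source uses the same envelope, a function can read cloudevent.type and cloudevent.data the same way whether the event came from Cloud Storage, Pub/Sub, or Cloud Audit Logs.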

Objectives

In this lab, you will:

- Write a function that responds to HTTP calls.

- Write a function that responds to Cloud Storage events.

- Write a function that responds to Cloud Audit Logs.

- Deploy multiple revisions of a Cloud Function.

- Get rid of cold starts with minimum instances.

- Set up concurrency.

Setup and requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab. Remember, once you start, you cannot pause a lab.

How to start your lab and sign in to the Google Cloud console

- Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is the Lab Details panel with the following:

- The Open Google Cloud console button

- Time remaining

- The temporary credentials that you must use for this lab

- Other information, if needed, to step through this lab

- Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

The lab spins up resources, and then opens another tab that shows the Sign in page.

Tip: Arrange the tabs in separate windows, side-by-side.

Note: If you see the Choose an account dialog, click Use Another Account.

- If necessary, copy the Username below and paste it into the Sign in dialog.

{{{user_0.username | "Username"}}}

You can also find the Username in the Lab Details panel.

- Click Next.

- Copy the Password below and paste it into the Welcome dialog.

{{{user_0.password | "Password"}}}

You can also find the Password in the Lab Details panel.

- Click Next.

Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

Note: Using your own Google Cloud account for this lab may incur extra charges.

- Click through the subsequent pages:

- Accept the terms and conditions.

- Do not add recovery options or two-factor authentication (because this is a temporary account).

- Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Activate Cloud Shell

Cloud Shell is a virtual machine that is loaded with development tools. It offers a persistent 5 GB home directory and runs on Google Cloud. Cloud Shell provides command-line access to your Google Cloud resources.

- Click Activate Cloud Shell at the top of the Google Cloud console.

When you are connected, you are already authenticated, and the project is set to your Project_ID.

gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab-completion.

- (Optional) You can list the active account name by running gcloud auth list. If prompted, click Authorize.

- (Optional) You can list the project ID by running gcloud config list project.

Note: For full documentation of gcloud, refer to the gcloud CLI overview guide.

Task 1. Enable APIs

Before you create the Cloud Functions, you will need to enable the relevant APIs.

- In Cloud Shell, run the following command to set your Project ID variable.

- Run the following command to set the Region variable.

- Execute the following command to enable all necessary services.

Task 2. Create an HTTP function

For the first function, you will create an authenticated Node.js function that responds to HTTP requests, and use a 10 minute timeout to showcase how a function can have more time to respond to HTTP requests.

Create

- Run the following command to create the folder and files for the app and navigate to the folder:

- Click the Open Editor button on the toolbar of Cloud Shell. (You can switch between Cloud Shell and the code editor by using the Open Editor and Open Terminal icons as required, or click the Open in new window button to leave the Editor open in a separate tab.)

- In the Editor, add the following code to the hello-http/index.js file that simply responds to HTTP requests:

- Add the following content to the hello-http/package.json file to specify the dependencies.
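For the hello-http/index.js step, a minimal sketch of an HTTP handler could look like the following. It assumes the Functions Framework for Node.js (the @google-cloud/functions-framework package) is the dependency declared in package.json; the entry-point name helloWorld and the greeting text are placeholders, not values confirmed by this page. The registration is wrapped in try/catch only so the sketch also runs where the package is not installed.

```javascript
// Handler for HTTP requests: replies with status 200 and a fixed greeting.
// The greeting text is a placeholder.
function helloWorld(req, res) {
  res.status(200).send('HTTP with Node.js in GCF 2nd gen!');
}

// Register the handler under the (assumed) entry-point name when the
// Functions Framework is installed; skipped otherwise so the sketch can
// run standalone.
try {
  const functions = require('@google-cloud/functions-framework');
  functions.http('helloWorld', helloWorld);
} catch (err) {
  console.log('Functions Framework not available; handler left unregistered.');
}

// Standalone check using a minimal stand-in for the Express response object.
const fakeRes = {
  statusCode: null,
  body: null,
  status(code) { this.statusCode = code; return this; },
  send(body) { this.body = body; return this; },
};
helloWorld({}, fakeRes);
console.log(fakeRes.statusCode, fakeRes.body);
```

Whatever name is passed to functions.http must match the entry point given at deploy time, which is why the sketch keeps the handler as a named function.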

Deploy

- In Cloud Shell, run the following command to deploy the function:

Note: If you get a permissions error, wait a few minutes and try the deployment again. It can take a few minutes for the APIs to be enabled.

Although not strictly necessary for this step, the function is deployed with a timeout of 600 seconds, which gives it more time to respond to HTTP requests.

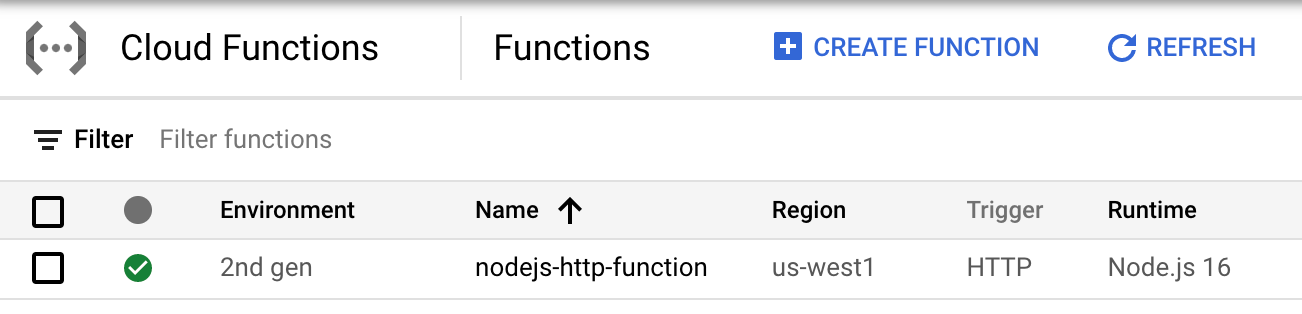

- Once the function is deployed, from the Navigation Menu go to the Cloud Functions page. Verify the function was deployed successfully.

Test

- Test the function with the following command:

You should see the following message as a response:

Click Check my progress to verify the objective.

Task 3. Create a Cloud Storage function

In this section, you will create a Node.js function that responds to events from a Cloud Storage bucket.

Setup

- To use Cloud Storage functions, first grant the pubsub.publisher IAM role to the Cloud Storage service account:

Create

- Run the following command to create the folder and files for the app and navigate to the folder:

- Add the following code to the hello-storage/index.js file that simply responds to Cloud Storage events:

- Add the following content to the hello-storage/package.json file to specify the dependencies:
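For the hello-storage/index.js step, an event-driven handler might be sketched as below. It again assumes the Functions Framework for Node.js; the entry-point name helloStorage, the log message, and the sample event are illustrative assumptions. The bucket and name fields follow the Cloud Storage object payload, and the returned string exists only to make the sketch easy to check.

```javascript
// Handler for CloudEvents: logs the event and returns a short summary.
function helloStorage(cloudevent) {
  console.log('Cloud Storage event with Node.js in GCF 2nd gen!');
  console.log(cloudevent);
  const data = cloudevent.data || {};
  return `${cloudevent.type}: gs://${data.bucket}/${data.name}`;
}

// Register the handler under the (assumed) entry-point name when the
// Functions Framework is installed; skipped otherwise.
try {
  const functions = require('@google-cloud/functions-framework');
  functions.cloudEvent('helloStorage', helloStorage);
} catch (err) {
  console.log('Functions Framework not available; handler left unregistered.');
}

// Standalone check with a hand-written event resembling an object upload.
const storageSummary = helloStorage({
  specversion: '1.0',
  id: 'example-id',
  type: 'google.cloud.storage.object.v1.finalized',
  source: '//storage.googleapis.com/projects/_/buckets/example-bucket',
  data: { bucket: 'example-bucket', name: 'random.txt' },
});
console.log(storageSummary);
```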

Deploy

- First, create a Cloud Storage bucket to use for creating events:

- Deploy the function:

- Once the function is deployed, verify that you can see it under the Cloud Functions section of the Cloud Console.

Test

- Test the function by uploading a file to the bucket:

- Run the following command. You should see the received CloudEvent in the logs:

You should see output similar to the following:

Click Check my progress to verify the objective.

Task 4. Create a Cloud Audit Logs function

In this section, you will create a Node.js function that receives a Cloud Audit Log event when a Compute Engine VM instance is created. In response, it adds a label to the newly created VM, specifying the creator of the VM.

Determine newly created Compute Engine VMs

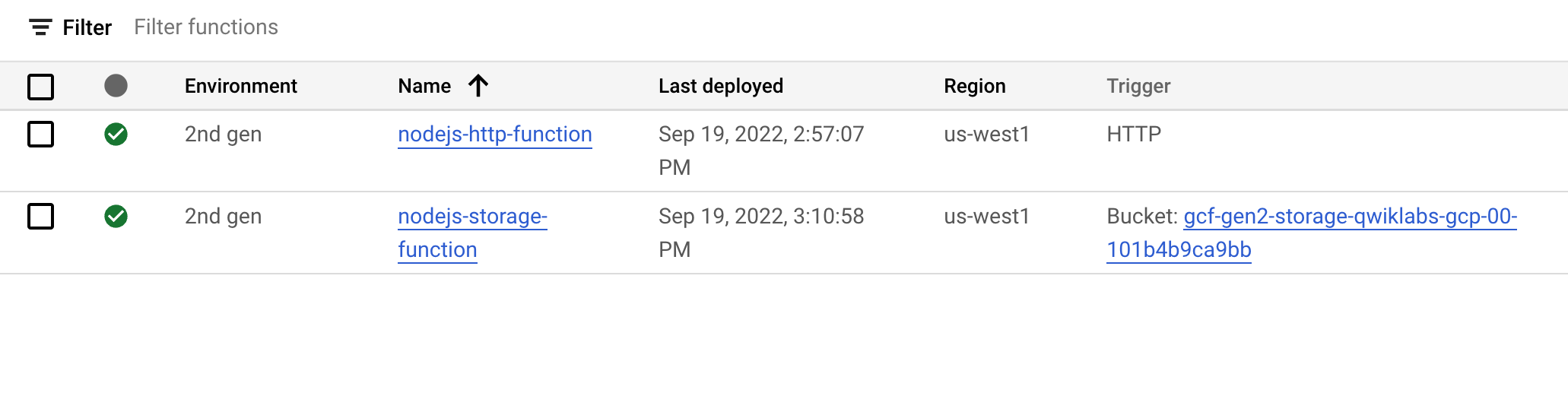

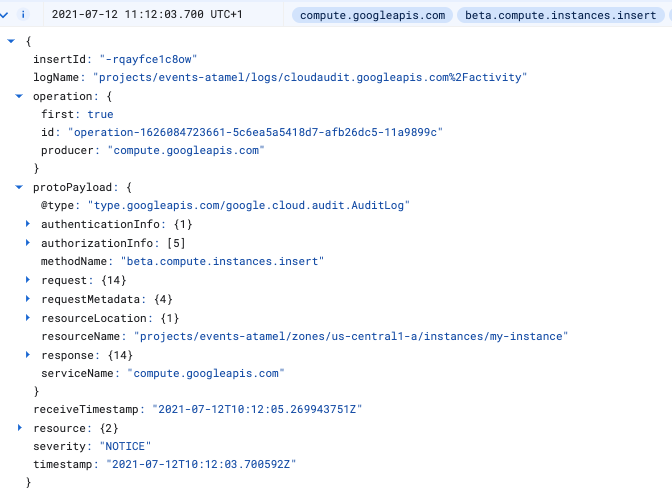

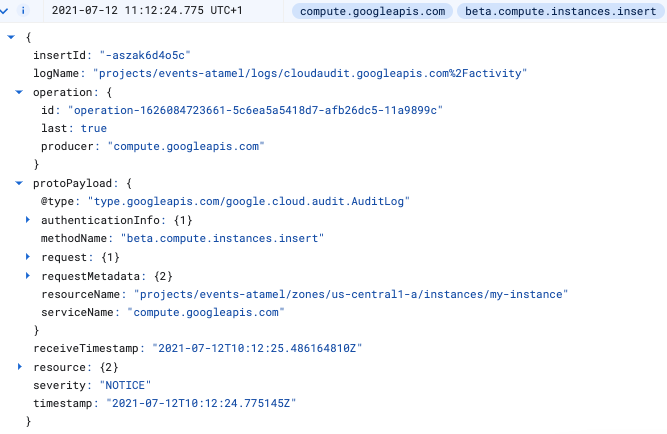

Compute Engine emits 2 Audit Logs when a VM is created.

The first one is emitted at the beginning of the VM creation and looks like this:

The second one is emitted after the VM creation and looks like this:

Notice the operation field with the first: true and last: true values. The second Audit Log contains all the information you need to label an instance, therefore you will use the last: true flag to detect it in Cloud Functions.
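The last: true check can be sketched as follows. This is not the code from the lab's repository; it is a hedged illustration that assumes the Audit Log entry arrives as cloudevent.data, that protoPayload.resourceName has the shape projects/PROJECT/zones/ZONE/instances/NAME, and that the creator is taken from protoPayload.authenticationInfo.principalEmail. The extractLabelTarget helper and the sanitizing rule for the label value are hypothetical.

```javascript
// Returns the details needed to label a VM, or null when the event
// should be skipped.
function extractLabelTarget(cloudevent) {
  const entry = cloudevent.data || {};

  // Only the final Audit Log of the operation (last: true) carries all the
  // information needed, so every other entry is ignored.
  if (!entry.operation || !entry.operation.last) {
    return null;
  }

  const payload = entry.protoPayload || {};

  // Assumed resourceName shape: projects/<p>/zones/<z>/instances/<name>
  const match = /^projects\/([^/]+)\/zones\/([^/]+)\/instances\/([^/]+)$/
    .exec(payload.resourceName || '');
  if (!match) {
    return null;
  }

  // Derive a label-friendly creator value from the email's local part
  // (assumption: lowercase letters, digits, '_' and '-' are safe).
  const email = (payload.authenticationInfo || {}).principalEmail || '';
  const creator = email.split('@')[0].toLowerCase().replace(/[^a-z0-9_-]/g, '_');

  return { project: match[1], zone: match[2], instance: match[3], creator };
}

// First log of the operation: skipped.
const firstResult = extractLabelTarget({ data: { operation: { first: true } } });

// Last log of the operation: yields the labeling target.
const lastResult = extractLabelTarget({
  data: {
    operation: { last: true },
    protoPayload: {
      resourceName: 'projects/my-project/zones/us-central1-a/instances/instance-1',
      authenticationInfo: { principalEmail: 'Student.01@example.com' },
    },
  },
});
console.log(firstResult, lastResult);
```

Returning null for the first entry keeps the function idempotent: it acts once per VM creation even though two events are delivered.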

Setup

To use Cloud Audit Log functions, you must enable Audit Logs for Eventarc. You also need to use a service account with the eventarc.eventReceiver role.

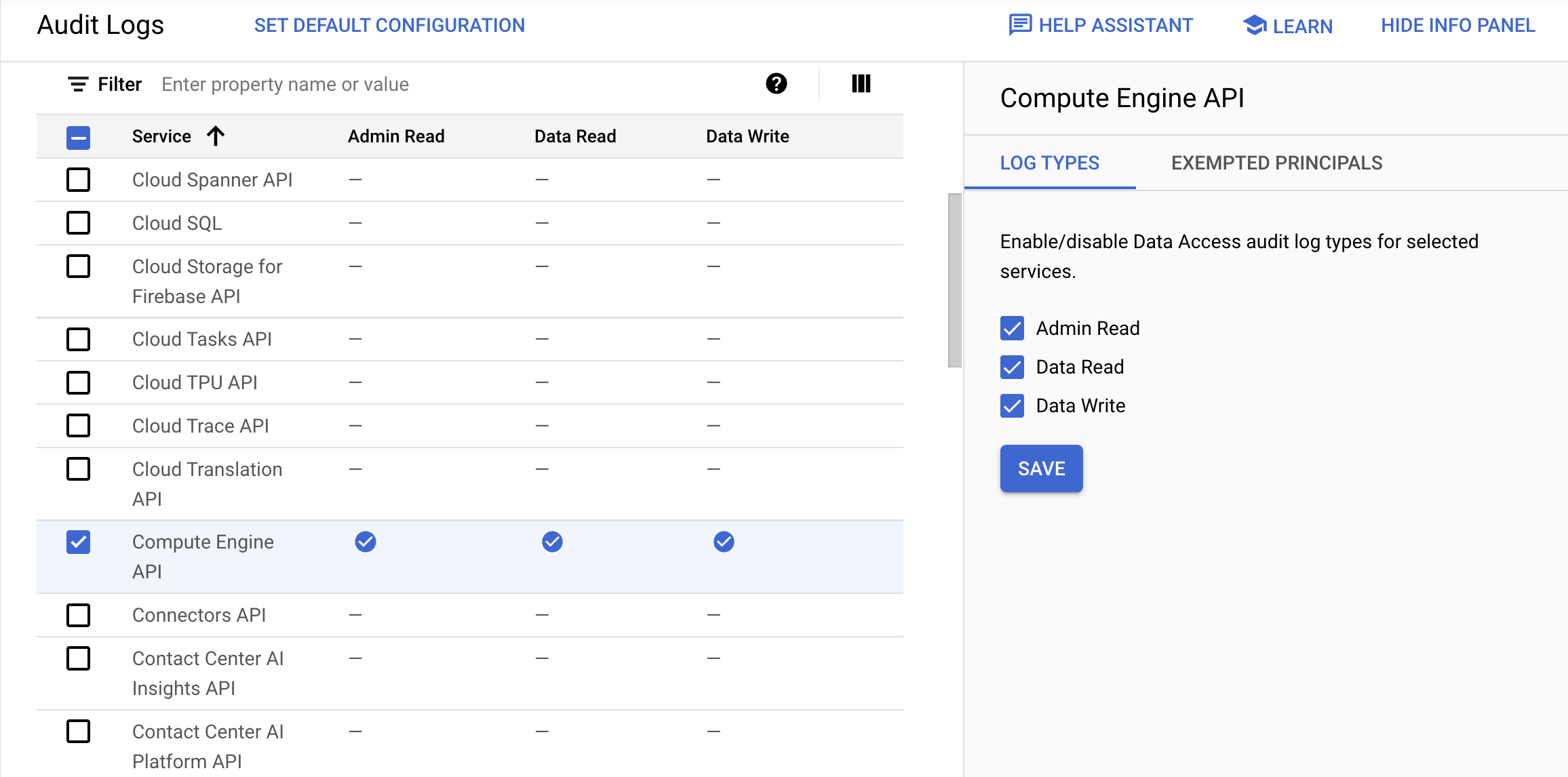

- From the Navigation Menu, go to IAM & Admin > Audit Logs.

Note: You can ignore the missing resourcemanager.folders.getIamPolicy permission warning.

- Find the Compute Engine API and click the check box next to it. If you are unable to find the API, search for it on the next page.

- On the info pane on the right, check the Admin Read, Data Read, and Data Write log types and click Save.

- Grant the default Compute Engine service account the eventarc.eventReceiver IAM role:

Get the code

- Run the following code to clone the repo that contains the application:

- Navigate to the app directory:

The index.js file contains the application code that receives the Audit Log wrapped into a CloudEvent. It then extracts the Compute Engine VM instance details and sets a label on the VM instance. Feel free to study index.js in more detail on your own.

Deploy

- Deploy the function with gcloud as before. Notice how the function is filtering on Audit Logs for Compute Engine insertions with the --trigger-event-filters flag:

Click Check my progress to verify the objective.

Test

To test your Audit Log function, create a Compute Engine VM in the Cloud Console. (You can also create VMs with gcloud, but doing so does not seem to generate Audit Logs.)

- From the Navigation Menu, go to Compute Engine > VM instances.

- Click Create Instance.

- Leave all of the fields as the default values and click Create.

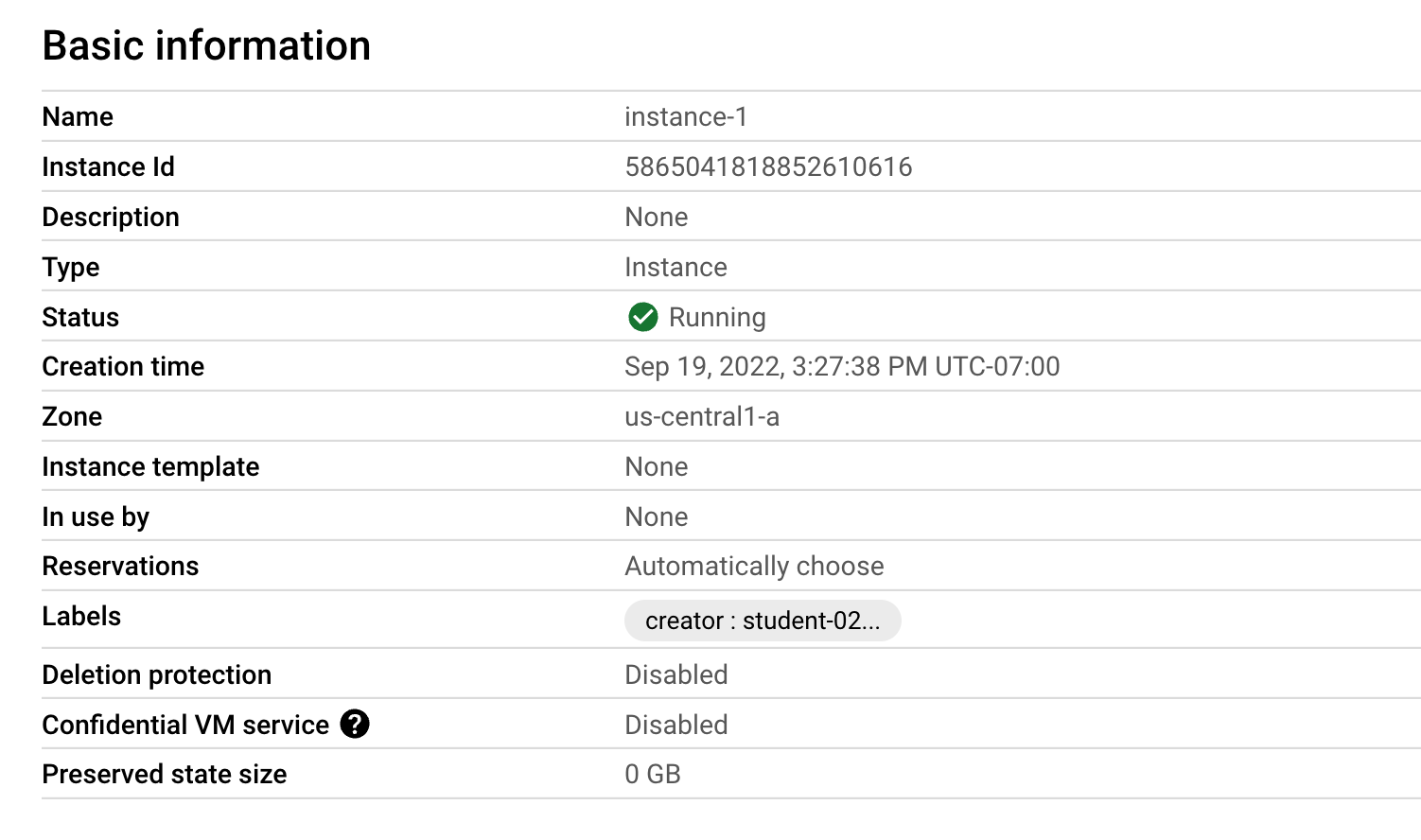

Once the VM creation completes, you should see the added creator label on the VM in the Cloud Console in the Basic information section.

- Verify using the following command:

The command expects the VM to be named instance-1. You should see the label in the output, as in the following example:

Click Check my progress to verify the objective.

- Run the following command to delete the VM. Type Y when prompted to confirm.

Task 5. Deploy different revisions

Cloud Functions (2nd gen) supports multiple revisions of your functions, as well as splitting traffic between different revisions and rolling your function back to a prior version. This is possible because 2nd gen functions are Cloud Run services under the hood. In this section, you will deploy two revisions of your Cloud Function.

Create

- Run the following command to create the folder and files for the app and navigate to the folder:

- Add the following code to the hello-world-colored/main.py file with a Python function that reads a color environment variable and responds with Hello World in that background color:

Deploy

- Deploy the first revision of the function with an orange background:

At this point, if you test the function by viewing the HTTP trigger (the URI output of the above deployment command) in your browser, you should see Hello World with an orange background:

-

Navigate to the Cloud Functions page in the Console and click the hello-world-colored function.

-

On the top right of the page under Powered by Cloud Run click hello-world-colored.

This will re-direct you to the Cloud Run service page.

-

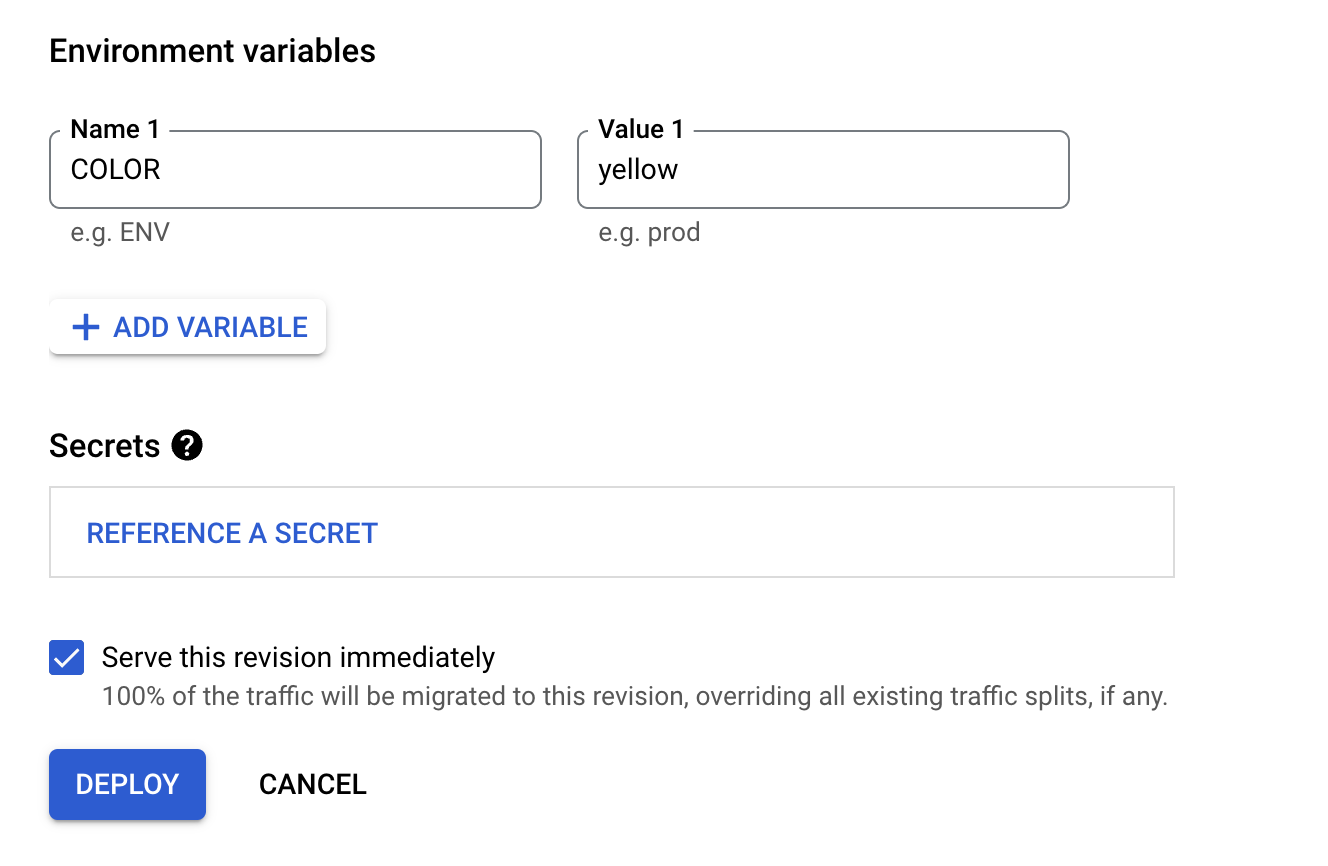

Click the Revisions tab and then select Edit & Deploy New Revision.

-

Leave everything as default and scroll down and select Variables & Secrets tab and in Environment Variables section. Update the COLOR environment variable to

yellow.

- Click Deploy.

Since this is the latest revision, if you test the function, you should see Hello World with a yellow background:

Click Check my progress to verify the objective.

Task 6. Set up minimum instances

In Cloud Functions (2nd gen), one can specify a minimum number of function instances to be kept warm and ready to serve requests. This is useful in limiting the number of cold starts. In this section, you will deploy a function with slow initialization. You'll observe the cold start problem. Then, you will deploy the function with the minimum instance value set to 1 to get rid of the cold start.

Create

- Run the following command to create the folder and files for the app and navigate to the folder:

- Add the following code to the min-instances/main.go file. This Go service has an init function that sleeps for 10 seconds to simulate a long initialization. It also has a HelloWorld function that responds to HTTP calls:

- Add the following code to the min-instances/go.mod file. This specifies the module path and Go language version:

Deploy

- Run the following command to deploy the first revision of the function with the default minimum instance value of zero:

- Test the function with this command:

You should observe a 10 second delay (cold start) on the first call and then see the message. Subsequent calls should return immediately.

Set minimum instances

To get rid of the cold start on the first request, redeploy the function with the minimum number of instances set to 1 (the setting that the --min-instances flag controls) as follows:

- Navigate to the Cloud Run page in the Console and click the slow-function service.

- Click the Revisions tab and then select Edit & Deploy New Revision.

- Under the Autoscaling section, set Minimum number of instances to 1 and Maximum number of instances to 4.

- Leave the rest of the fields as default and click Deploy.

Test

- Test the function again:

You should no longer see the 10 second delay on the first request. The cold start problem for the first invocation (after a long period without traffic) is gone, thanks to minimum instances! See using minimum instances for more information.

Click Check my progress to verify the objective.

Task 7. Create a function with concurrency

In Cloud Functions (2nd gen), a function instance handles 1 concurrent request by default but you can specify the number of concurrent requests that can be processed simultaneously by an instance. This can also be useful in preventing cold starts as a new function instance does not need to be created for every parallel request.

In this section, you will use the function with slow initialization from the previous step. You will send it 10 requests and observe the cold start problem again as new function instances need to be created to handle the requests.

To fix the cold-start problem, you will deploy another function with a concurrency value of 100. You will observe that the 10 requests now do not cause the cold start problem and a single function instance can handle all the requests.
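As a rough mental model of why concurrency matters here, the number of instances needed to absorb a burst is about the number of simultaneous requests divided by the per-instance concurrency, rounded up. The sketch below is only that arithmetic; the instancesNeeded helper is hypothetical, and real autoscaling also depends on CPU utilization, instance startup time, and the configured maximum number of instances.

```javascript
// Simplified estimate of how many instances a burst of simultaneous
// requests requires for a given per-instance concurrency setting.
function instancesNeeded(concurrentRequests, concurrencyPerInstance) {
  if (concurrentRequests <= 0) {
    return 0;
  }
  return Math.ceil(concurrentRequests / concurrencyPerInstance);
}

// 10 parallel requests with the default concurrency of 1.
console.log(instancesNeeded(10, 1));   // 10
// The same 10 requests with concurrency set to 100.
console.log(instancesNeeded(10, 100)); // 1
```

Each extra instance in the first case has to run the slow initialization before serving, which is where the additional cold starts come from.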

Test without concurrency

- Run the following command to get the URL of the function and save it as an environment variable:

- Use an open source benchmarking tool called hey to send 10 concurrent requests to the slow function. hey is already installed in Cloud Shell:

You should see in the output of hey that some requests take a long time:

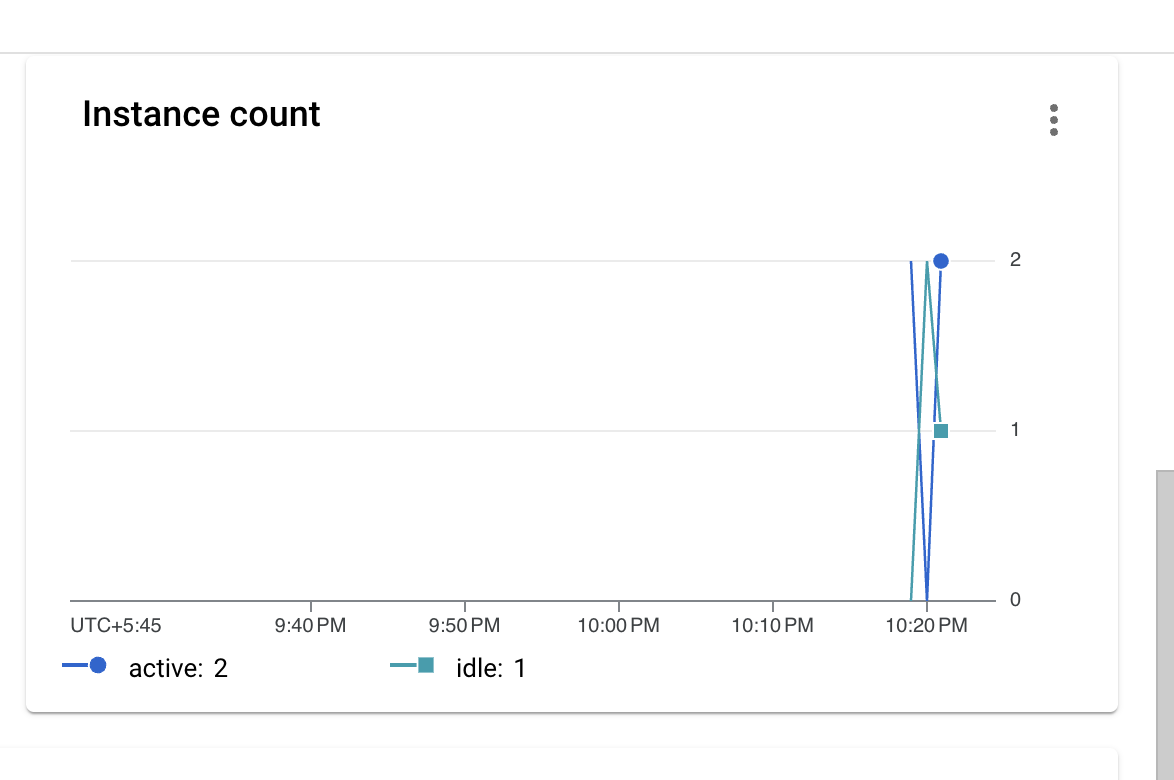

This is because more function instances are being created to handle the requests. If you check the active instances count for the function, you should also see that more than one instance was created at some point, and these are causing the cold start problem:

- Run the following command to delete the function. Type Y when prompted to confirm.

Deploy

- Deploy a new function identical to the previous function. Once deployed, you will increase its concurrency:

Set concurrency

Now you will set the concurrency of the underlying Cloud Run service for the function to 100 (the maximum is 1000). This ensures that up to 100 concurrent requests can be handled by a single function instance.

- From the Navigation Menu, go to Cloud Run.

- Click the slow-concurrent-function service.

- Click the Revisions tab and then select Edit & Deploy New Revision.

- Under the Resources section, set the CPU to 1.

- Under Requests, set the Maximum concurrent requests per instance to 100.

- Under Autoscaling, set the Maximum number of instances to 4.

- Leave the rest of the fields as default and click Deploy.

Test with concurrency

- Once your function has deployed, run the following command to get the URL of the new function and save it as an environment variable:

- Now use hey to send 10 concurrent requests:

You should see in the output of hey that all requests are processed quickly:

A single function instance was able to handle all the requests and the cold start problem is gone, thanks to the increased concurrency! See concurrency for more information.

Click Check my progress to verify the objective.

Congratulations!

In this lab, you deployed multiple 2nd Gen Cloud Functions that responded to HTTP calls, Cloud Storage events, and Cloud Audit Logs. You then deployed multiple revisions of the Cloud Run services running the functions, set concurrency values, and got rid of cold starts with minimum instances.

Next Steps / Learn More

For more information about Cloud Functions 2nd Gen, check out the following:

- Cloud Functions 2nd Gen Blog Post

- Cloud Functions version comparison

- Getting Started with Cloud Functions 2nd Gen Codelab

Google Cloud training and certification

...helps you make the most of Google Cloud technologies. Our classes include technical skills and best practices to help you get up to speed quickly and continue your learning journey. We offer fundamental to advanced level training, with on-demand, live, and virtual options to suit your busy schedule. Certifications help you validate and prove your skill and expertise in Google Cloud technologies.

Manual Last Updated March 06, 2024

Lab Last Tested March 06, 2024

Copyright 2024 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.